David Graeber

Bullshit Jobs

A Theory

Originally published May 15, 2018.

Preface: On the Phenomenon of Bullshit Jobs

Chapter 1: What Is a Bullshit Job?

why a mafia hit man is not a good example of a bullshit job

on the common misconception that bullshit jobs are confined largely to the public sector

why hairdressers are a poor example of a bullshit job

Chapter 2: What Sorts of Bullshit Jobs Are There?

the five major varieties of bullshit jobs

on complex multiform bullshit jobs

a word on second-order bullshit jobs

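Chapter 3: Why Do Those in Bullshit Jobs Regularly Report Themselves Unhappy? (On Spiritual Violence, Part 1)

why many of our fundamental assumptions on human motivation appear to be incorrect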

Chapter 4: What Is It Like to Have a Bullshit Job? (On Spiritual Violence, Part 2)

why having a bullshit job is not always necessarily that bad

on the misery of ambiguity and forced pretense

on the misery of not being a cause

on the misery of not feeling entitled to one’s misery

on the misery of knowing that one is doing harm

Chapter 5: Why Are Bullshit Jobs Proliferating?

a brief excursus on causality and the nature of sociological explanation

sundry notes on the role of government in creating and maintaining bullshit jobs

concerning some false explanations for the rise of bullshit jobs

why the financial industry might be considered a paradigm for bullshit job creation

conclusion, with a brief return to the question of three levels of causation

Chapter 6: Why Do We as a Society Not Object to the Growth of Pointless Employment?

on the impossibility of developing an absolute measure of value

on the theological roots of our attitudes toward labor

how the current crisis over robotization relates to the larger problem of bullshit jobs

To anyone who would rather be doing something useful with themselves.

Preface: On the Phenomenon of Bullshit Jobs

In the spring of 2013, I unwittingly set off a very minor international sensation.

It all began when I was asked to write an essay for a new radical magazine called Strike! The editor asked if I had anything provocative that no one else would be likely to publish. I usually have one or two essay ideas like that stewing around, so I drafted one up and presented him with a brief piece entitled “On the Phenomenon of Bullshit Jobs.”

The essay was based on a hunch. Everyone is familiar with those sorts of jobs that don’t seem, to the outsider, to really do much of anything: HR consultants, communications coordinators, PR researchers, financial strategists, corporate lawyers, or the sort of people (very familiar in academic contexts) who spend their time staffing committees that discuss the problem of unnecessary committees. The list was seemingly endless. What, I wondered, if these jobs really are useless, and those who hold them are aware of it? Certainly you meet people now and then who seem to feel their jobs are pointless and unnecessary. Could there be anything more demoralizing than having to wake up in the morning five out of seven days of one’s adult life to perform a task that one secretly believed did not need to be performed—that was simply a waste of time or resources, or that even made the world worse? Would this not be a terrible psychic wound running across our society? Yet if so, it was one that no one ever seemed to talk about. There were plenty of surveys on whether people were happy at work. There were none, as far as I knew, about whether or not they felt their jobs had any good reason to exist.

This possibility that our society is riddled with useless jobs that no one wants to talk about did not seem inherently implausible. The subject of work is riddled with taboos. Even the fact that most people don’t like their jobs and would relish an excuse not to go to work is considered something that can’t really be admitted on TV—certainly not on the TV news, even if it might occasionally be alluded to in documentaries and stand-up comedy. I had experienced these taboos myself: I had once acted as the media liaison for an activist group that, rumor had it, was planning a civil disobedience campaign to shut down the Washington, DC, transport system as part of a protest against a global economic summit. In the days leading up to it, you could hardly go anywhere looking like an anarchist without some cheerful civil servant walking up to you and asking whether it was really true he or she wouldn’t have to go to work on Monday. Yet at the same time, TV crews managed dutifully to interview city employees—and I wouldn’t be surprised if some of them were the same city employees—commenting on how terribly tragic it would be if they wouldn’t be able to get to work, since they knew that’s what it would take to get them on TV. No one seems to feel free to say what they really feel about such matters—at least in public.

It was plausible, but I didn’t really know. In a way, I wrote the piece as a kind of experiment. I was interested to see what sort of response it would elicit.

This is what I wrote for the August 2013 issue:

On the Phenomenon of Bullshit Jobs

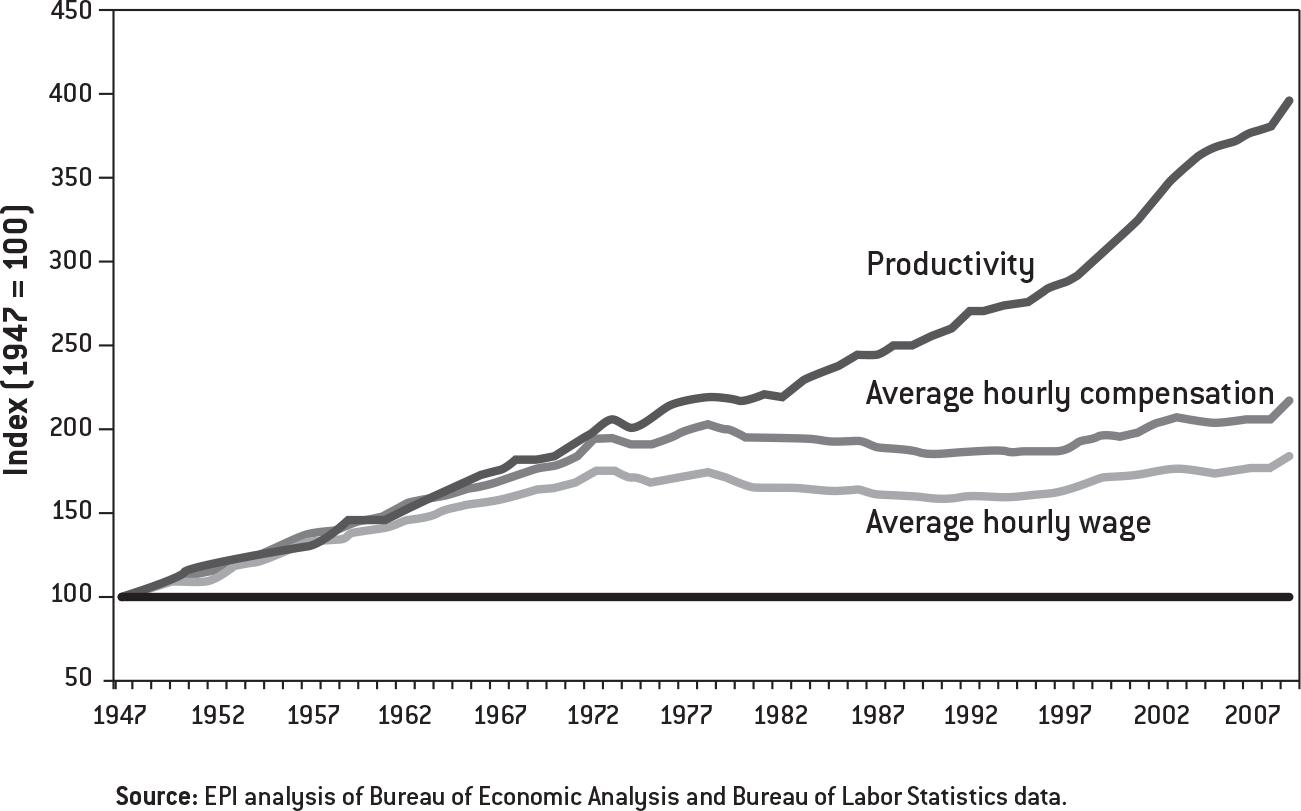

In the year 1930, John Maynard Keynes predicted that, by century’s end, technology would have advanced sufficiently that countries like Great Britain or the United States would have achieved a fifteen-hour work week. There’s every reason to believe he was right. In technological terms, we are quite capable of this. And yet it didn’t happen. Instead, technology has been marshaled, if anything, to figure out ways to make us all work more. In order to achieve this, jobs have had to be created that are, effectively, pointless. Huge swathes of people, in Europe and North America in particular, spend their entire working lives performing tasks they secretly believe do not really need to be performed. The moral and spiritual damage that comes from this situation is profound. It is a scar across our collective soul. Yet virtually no one talks about it.

Why did Keynes’s promised utopia—still being eagerly awaited in the sixties—never materialize? The standard line today is that he didn’t figure in the massive increase in consumerism. Given the choice between fewer hours and more toys and pleasures, we’ve collectively chosen the latter. This presents a nice morality tale, but even a moment’s reflection shows it can’t really be true. Yes, we have witnessed the creation of an endless variety of new jobs and industries since the twenties, but very few have anything to do with the production and distribution of sushi, iPhones, or fancy sneakers.

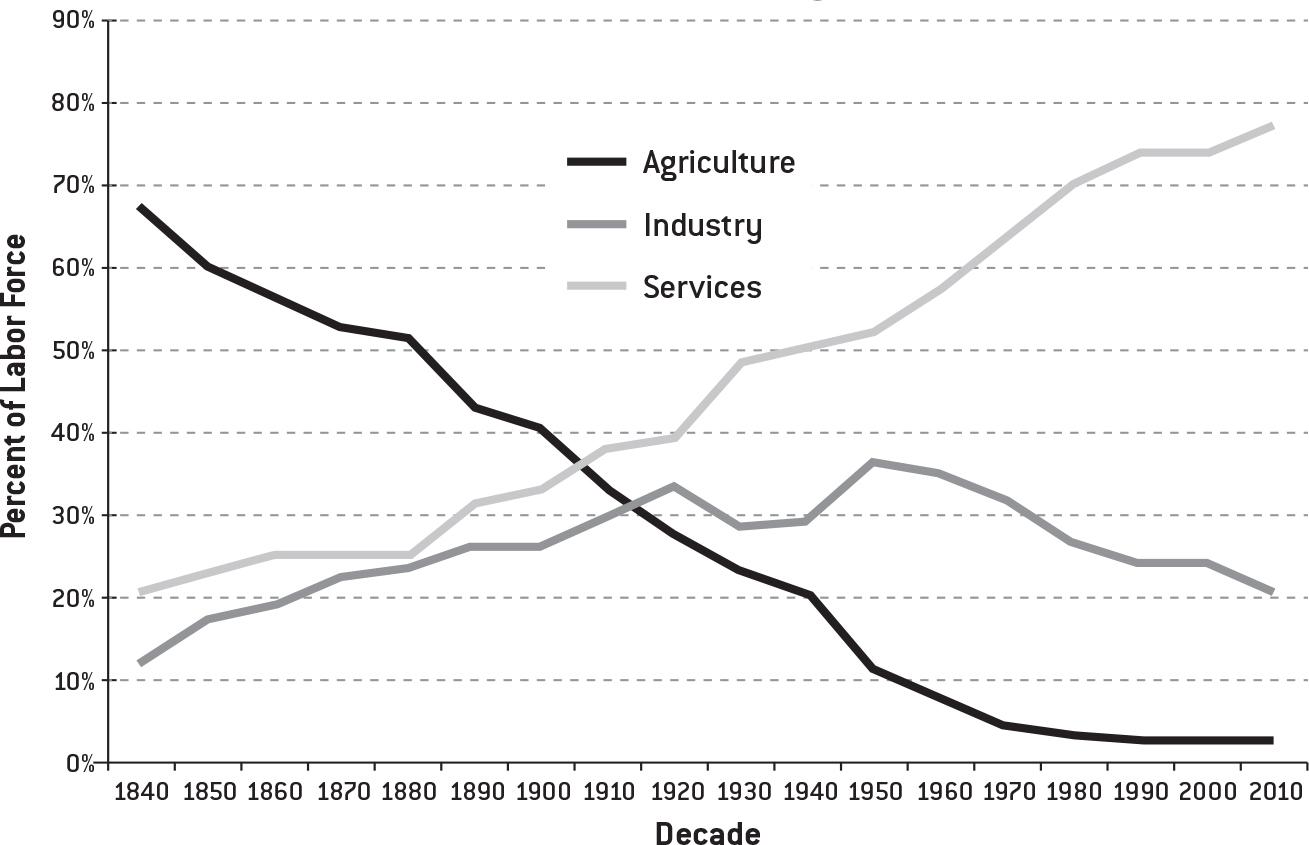

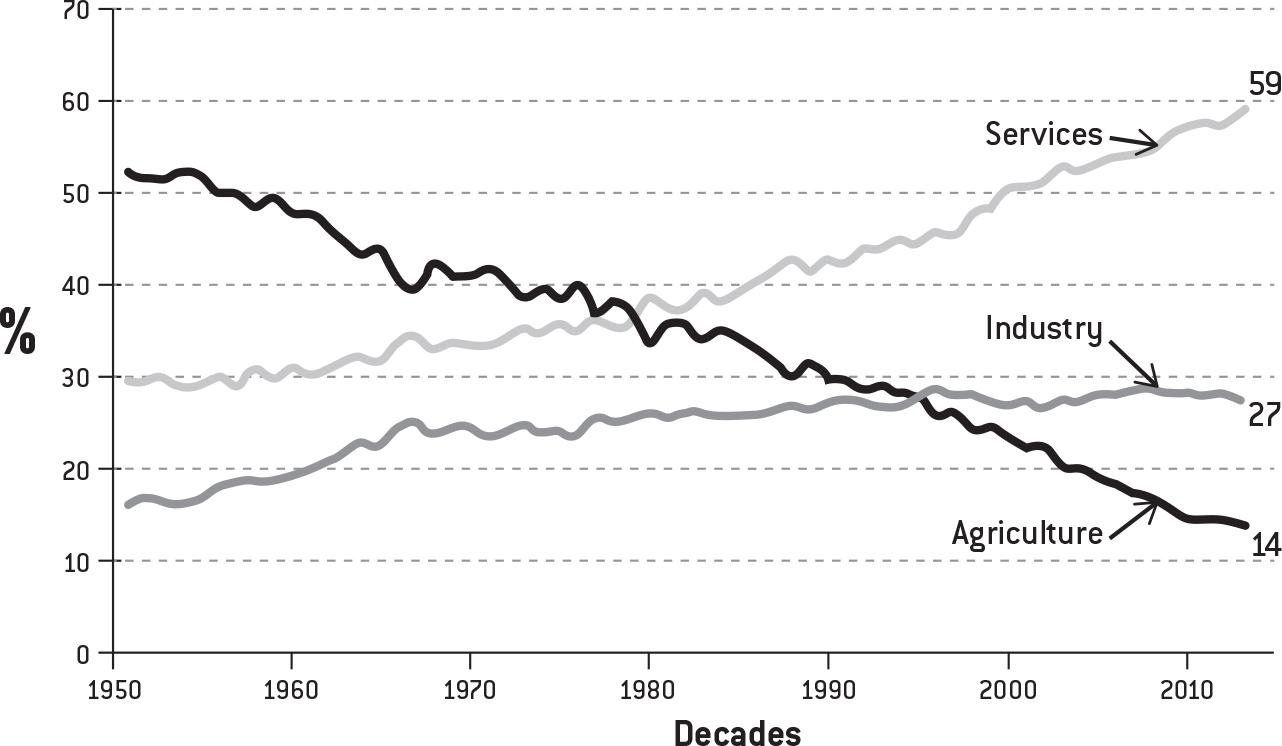

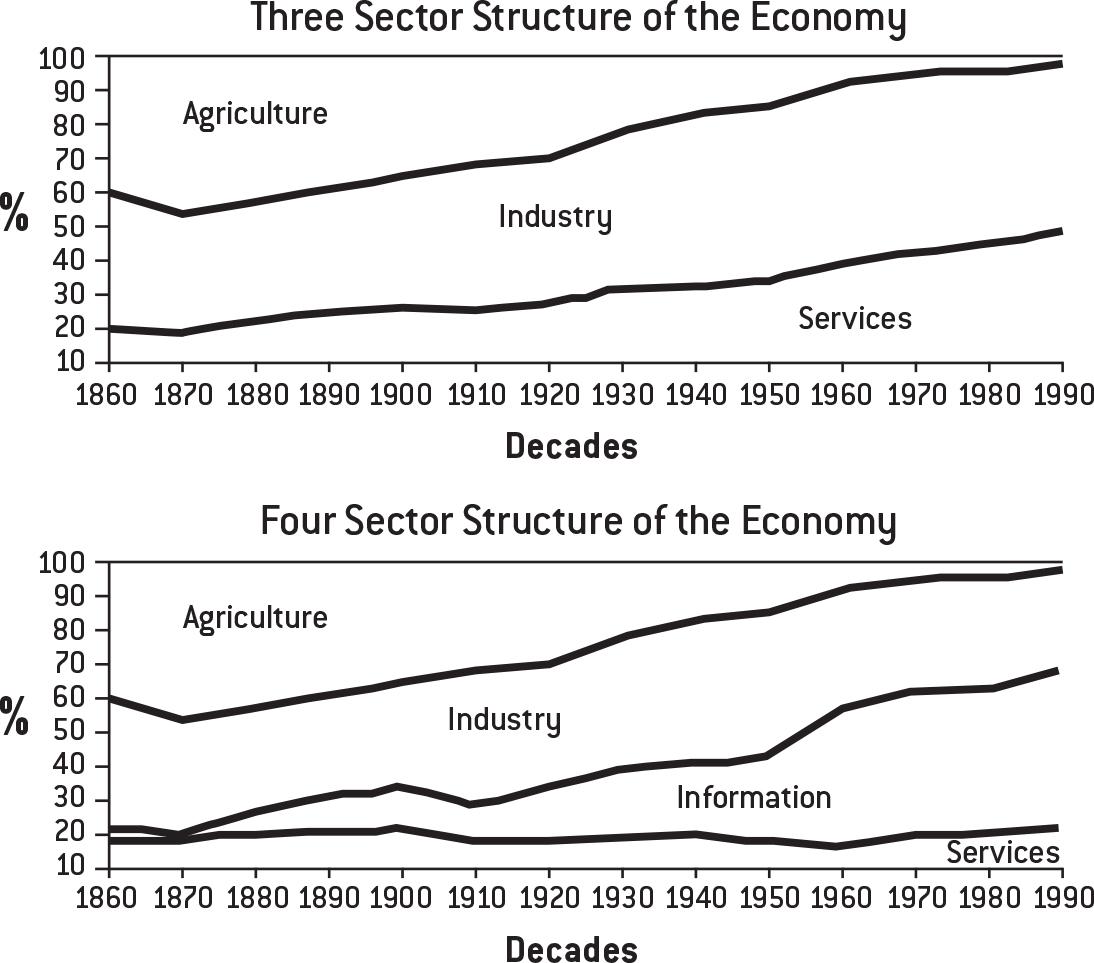

So what are these new jobs, precisely? A recent report comparing employment in the US between 1910 and 2000 gives us a clear picture (and, I note, one pretty much exactly echoed in the UK). Over the course of the last century, the number of workers employed as domestic servants, in industry, and in the farm sector has collapsed dramatically. At the same time, “professional, managerial, clerical, sales, and service workers” tripled, growing “from one-quarter to three-quarters of total employment.” In other words, productive jobs have, just as predicted, been largely automated away. (Even if you count industrial workers globally, including the toiling masses in India and China, such workers are still not nearly so large a percentage of the world population as they used to be.)

But rather than allowing a massive reduction of working hours to free the world’s population to pursue their own projects, pleasures, visions, and ideas, we have seen the ballooning not even so much of the “service” sector as of the administrative sector, up to and including the creation of whole new industries like financial services or telemarketing, or the unprecedented expansion of sectors like corporate law, academic and health administration, human resources, and public relations. And these numbers do not even reflect all those people whose job is to provide administrative, technical, or security support for these industries, or, for that matter, the whole host of ancillary industries (dog washers, all-night pizza deliverymen) that only exist because everyone else is spending so much of their time working in all the other ones.

These are what I propose to call “bullshit jobs.”

It’s as if someone were out there making up pointless jobs just for the sake of keeping us all working. And here, precisely, lies the mystery. In capitalism, this is precisely what is not supposed to happen. Sure, in the old inefficient Socialist states like the Soviet Union, where employment was considered both a right and a sacred duty, the system made up as many jobs as it had to. (This is why in Soviet department stores it took three clerks to sell a piece of meat.) But, of course, this is the very sort of problem market competition is supposed to fix. According to economic theory, at least, the last thing a profit-seeking firm is going to do is shell out money to workers they don’t really need to employ. Still, somehow, it happens.

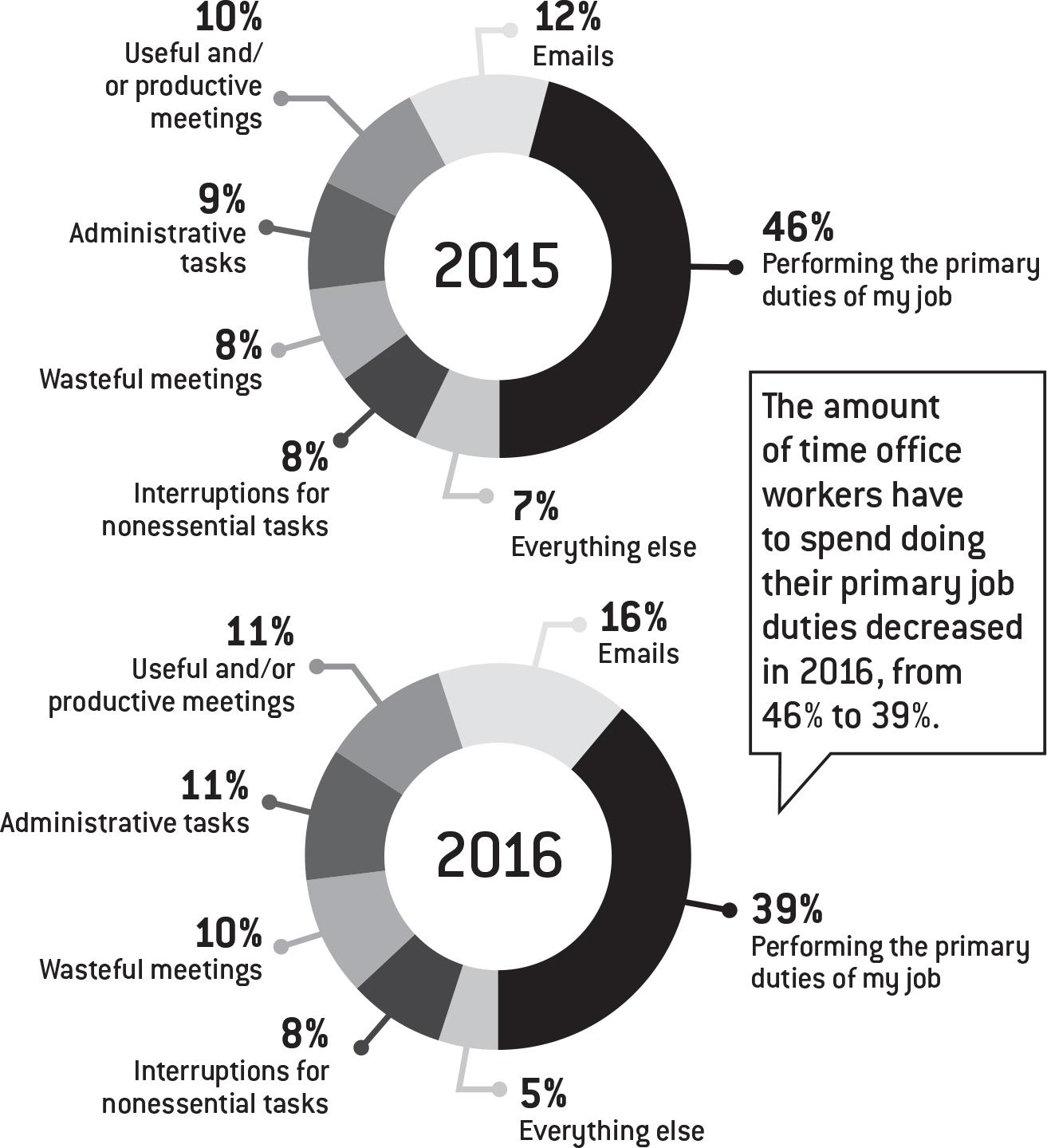

While corporations may engage in ruthless downsizing, the layoffs and speed-ups invariably fall on that class of people who are actually making, moving, fixing, and maintaining things. Through some strange alchemy no one can quite explain, the number of salaried paper pushers ultimately seems to expand, and more and more employees find themselves—not unlike Soviet workers, actually—working forty- or even fifty-hour weeks on paper but effectively working fifteen hours just as Keynes predicted, since the rest of their time is spent organizing or attending motivational seminars, updating their Facebook profiles, or downloading TV box sets.

The answer clearly isn’t economic: it’s moral and political. The ruling class has figured out that a happy and productive population with free time on their hands is a mortal danger. (Think of what started to happen when this even began to be approximated in the sixties.) And, on the other hand, the feeling that work is a moral value in itself, and that anyone not willing to submit themselves to some kind of intense work discipline for most of their waking hours deserves nothing, is extraordinarily convenient for them.

Once, when contemplating the apparently endless growth of administrative responsibilities in British academic departments, I came up with one possible vision of hell. Hell is a collection of individuals who are spending the bulk of their time working on a task they don’t like and are not especially good at. Say they were hired because they were excellent cabinetmakers, and then discover they are expected to spend a great deal of their time frying fish. Nor does the task really need to be done—at least, there’s only a very limited number of fish that need to be fried. Yet somehow they all become so obsessed with resentment at the thought that some of their coworkers might be spending more time making cabinets and not doing their fair share of the fish-frying responsibilities that before long, there are endless piles of useless, badly cooked fish stacked up all over the workshop, and it’s all that anyone really does.

I think this is actually a pretty accurate description of the moral dynamics of our own economy.

Now, I realize any such argument is going to run into immediate objections: “Who are you to say what jobs are really ‘necessary’? What’s ‘necessary,’ anyway? You’re an anthropology professor—what’s the ‘need’ for that?” (And, indeed, a lot of tabloid readers would take the existence of my job as the very definition of wasteful social expenditure.) And on one level, this is obviously true. There can be no objective measure of social value.

I would not presume to tell someone who is convinced they are making a meaningful contribution to the world that, really, they are not. But what about those people who are themselves convinced their jobs are meaningless? Not long ago, I got back in touch with a school friend whom I hadn’t seen since I was fifteen. I was amazed to discover that in the interim, he had become first a poet, then the front man in an indie rock band. I’d heard some of his songs on the radio, having no idea the singer was someone I actually knew. He was obviously brilliant, innovative, and his work had unquestionably brightened and improved the lives of people all over the world. Yet, after a couple of unsuccessful albums, he’d lost his contract, and, plagued with debts and a newborn daughter, ended up, as he put it, “taking the default choice of so many directionless folk: law school.” Now he’s a corporate lawyer working in a prominent New York firm. He was the first to admit that his job was utterly meaningless, contributed nothing to the world, and, in his own estimation, should not really exist.

There are a lot of questions one could ask here, starting with, What does it say about our society that it seems to generate an extremely limited demand for talented poet-musicians but an apparently infinite demand for specialists in corporate law? (Answer: If 1 percent of the population controls most of the disposable wealth, what we call “the market” reflects what they think is useful or important, not anybody else.) But even more, it shows that most people in pointless jobs are ultimately aware of it. In fact, I’m not sure I’ve ever met a corporate lawyer who didn’t think their job was bullshit. The same goes for almost all the new industries outlined above. There is a whole class of salaried professionals that, should you meet them at parties and admit that you do something that might be considered interesting (an anthropologist, for example), will want to avoid even discussing their line of work entirely. Give them a few drinks, and they will launch into tirades about how pointless and stupid their job really is.

There is a profound psychological violence here. How can one even begin to speak of dignity in labor when one secretly feels one’s job should not exist? How can it not create a sense of deep rage and resentment? Yet it is the peculiar genius of our society that its rulers have figured out a way, as in the case of the fish fryers, to ensure that rage is directed precisely against those who actually do get to do meaningful work. For instance: in our society, there seems to be a general rule that, the more obviously one’s work benefits other people, the less one is likely to be paid for it. Again, an objective measure is hard to find, but one easy way to get a sense is to ask: What would happen were this entire class of people to simply disappear? Say what you like about nurses, garbage collectors, or mechanics, it’s obvious that were they to vanish in a puff of smoke, the results would be immediate and catastrophic. A world without teachers or dockworkers would soon be in trouble, and even one without science-fiction writers or ska musicians would clearly be a lesser place. It’s not entirely clear how humanity would suffer were all private equity CEOs, lobbyists, PR researchers, actuaries, telemarketers, bailiffs, or legal consultants to similarly vanish.[1] (Many suspect it might improve markedly.) Yet apart from a handful of well-touted exceptions (doctors), the rule holds surprisingly well.

Even more perverse, there seems to be a broad sense that this is the way things should be. This is one of the secret strengths of right-wing populism. You can see it when tabloids whip up resentment against tube workers for paralyzing London during contract disputes: the very fact that tube workers can paralyze London shows that their work is actually necessary, but this seems to be precisely what annoys people. It’s even clearer in the United States, where Republicans have had remarkable success mobilizing resentment against schoolteachers and autoworkers (and not, significantly, against the school administrators or auto industry executives who actually cause the problems) for their supposedly bloated wages and benefits. It’s as if they are being told “But you get to teach children! Or make cars! You get to have real jobs! And on top of that, you have the nerve to also expect middle-class pensions and health care?”

If someone had designed a work regime perfectly suited to maintaining the power of finance capital, it’s hard to see how he or she could have done a better job. Real, productive workers are relentlessly squeezed and exploited. The remainder are divided between a terrorized stratum of the universally reviled unemployed and a larger stratum who are basically paid to do nothing, in positions designed to make them identify with the perspectives and sensibilities of the ruling class (managers, administrators, etc.)—and particularly its financial avatars—but, at the same time, foster a simmering resentment against anyone whose work has clear and undeniable social value. Clearly, the system was never consciously designed. It emerged from almost a century of trial and error. But it is the only explanation for why, despite our technological capacities, we are not all working three- to four-hour days.

If ever an essay’s hypothesis was confirmed by its reception, this was it. “On the Phenomenon of Bullshit Jobs” produced an explosion.

The irony was that the two weeks after the piece came out were the same two weeks that my partner and I had decided to spend with a basket of books, and each other, in a cabin in rural Quebec. We’d made a point of finding a location with no wireless. This left me in the awkward position of having to observe the results only on my mobile phone. The essay went viral almost immediately. Within weeks, it had been translated into at least a dozen languages, including German, Norwegian, Swedish, French, Czech, Romanian, Russian, Turkish, Latvian, Polish, Greek, Estonian, Catalan, and Korean, and was reprinted in newspapers from Switzerland to Australia. The original Strike! page received more than a million hits and crashed repeatedly from too much traffic. Blogs sprouted. Comments sections filled up with confessions from white-collar professionals; people wrote me asking for guidance or to tell me I had inspired them to quit their jobs to find something more meaningful. Here is one enthusiastic response (I’ve collected hundreds) from the comments section of Australia’s Canberra Times:

Wow! Nail on the head! I am a corporate lawyer (tax litigator, to be specific). I contribute nothing to this world and am utterly miserable all of the time. I don’t like it when people have the nerve to say “Why do it, then?” because it is so clearly not that simple. It so happens to be the only way right now for me to contribute to the 1 percent in such a significant way so as to reward me with a house in Sydney to raise my future kids… Thanks to technology, we are probably as productive in two days as we previously were in five. But thanks to greed and some busy-bee syndrome of productivity, we are still asked to slave away for the profit of others ahead of our own nonremunerated ambitions. Whether you believe in intelligent design or evolution, humans were not made to work—so to me, this is all just greed propped up by inflated prices of necessities.[2]

At one point, I got a message from an anonymous fan who said that he was part of an impromptu group circulating the piece within the financial services community; he’d received five emails containing the essay just that day (certainly one sign that many in financial services don’t have much to do). None of this answered the question of how many people really felt that way about their jobs—as opposed to, say, passing on the piece as a way to drop subtle hints to others—but before long, statistical evidence did indeed surface.

On January 5, 2015, a little more than a year after the article came out, on the first Monday of the new year—that is, the day most Londoners were returning to work from their winter holidays—someone took several hundred ads in London Underground cars and replaced them with a series of guerrilla posters consisting of quotes from the original essay. These were the ones they chose:

- Huge swathes of people spend their days performing tasks they secretly believe do not really need to be performed.
- It’s as if someone were out there making up pointless jobs for the sake of keeping us all working.
- The moral and spiritual damage that comes from this situation is profound. It is a scar across our collective soul. Yet virtually no one talks about it.
- How can one even begin to speak of dignity in labor when one secretly feels one’s job should not exist?

The response to the poster campaign was another spate of discussion in the media (I appeared briefly on Russia Today), as a result of which the polling agency YouGov took it upon itself to test the hypothesis and conducted a poll of Britons using language taken directly from the essay: for example, Does your job “make a meaningful contribution to the world”? Astonishingly, more than a third—37 percent—said they believed that it did not (whereas 50 percent said it did, and 13 percent were uncertain).

This was almost twice what I had anticipated—I’d imagined the percentage of bullshit jobs was probably around 20 percent. What’s more, a later poll in Holland came up with almost exactly the same results: in fact, a little higher, as 40 percent of Dutch workers reported that their jobs had no good reason to exist.

So not only has the hypothesis been confirmed by public reaction, it has now been overwhelmingly confirmed by statistical research.

Clearly, then, we have an important social phenomenon that has received almost no systematic attention.[3] Simply opening up a way to talk about it became, for many, cathartic. It was obvious that a larger exploration was in order.

What I want to do here is a bit more systematic than the original essay. The 2013 piece was for a magazine about revolutionary politics, and it emphasized the political implications of the problem. In fact, the essay was just one of a series of arguments I was developing at the time that the neoliberal (“free market”) ideology that had dominated the world since the days of Thatcher and Reagan was really the opposite of what it claimed to be; it was really a political project dressed up as an economic one.

I had come to this conclusion because it seemed to be the only way to explain how those in power actually behaved. While neoliberal rhetoric was always all about unleashing the magic of the marketplace and placing economic efficiency over all other values, the overall effect of free market policies has been that rates of economic growth have slowed pretty much everywhere except India and China; scientific and technological advance has stagnated; and in most wealthy countries, the younger generations can, for the first time in centuries, expect to lead less prosperous lives than their parents did. Yet on observing these effects, proponents of market ideology always reply with calls for even stronger doses of the same medicine, and politicians duly enact them. This struck me as odd. If a private company hired a consultant to come up with a business plan, and it resulted in a sharp decline in profits, that consultant would be fired. At the very least, he’d be asked to come up with a different plan. With free market reforms, this never seemed to happen. The more they failed, the more they were enacted. The only logical conclusion was that economic imperatives weren’t really driving the project.

What was? It seemed to me the answer had to lie in the mind-set of the political class. Almost all of those making the key decisions had attended college in the 1960s, when campuses were at the very epicenter of political ferment, and they felt strongly that such things must never happen again. As a result, while they might have been concerned with declining economic indicators, they were also quite delighted to note that the combination of globalization, gutting the power of unions, and creating an insecure and overworked workforce—along with aggressively paying lip service to sixties calls to hedonistic personal liberation (what came to be known as “lifestyle liberalism, fiscal conservatism”)—had the effect of simultaneously shifting more and more wealth and power to the wealthy and almost completely destroying the basis for organized challenges to their power. It might not have worked very well economically, but politically it worked like a dream. If nothing else, they had little incentive to abandon such policies. All I did in the essay was to pursue this insight: whenever you find someone doing something in the name of economic efficiency that seems completely economically irrational (like, say, paying people good money to do nothing all day), you had best start by asking, as the ancient Romans did, “Cui bono?”—“Who benefits?”—and how.

This is less a conspiracy theory approach than it is an anticonspiracy theory. I was asking why action wasn’t taken. Economic trends happen for all sorts of reasons, but if they cause problems for the rich and powerful, those rich and powerful people will pressure institutions to step in and do something about the matter. This is why after the financial crisis of 2008–09, large investment banks were bailed out but ordinary mortgage holders weren’t. The proliferation of bullshit jobs, as we’ll see, happened for a variety of reasons. The real question I was asking is why no one intervened (“conspired,” if you like) to do something about the matter.

In this book I want to do considerably more than that.

I believe that the phenomenon of bullshit employment can provide us with a window on much deeper social problems. We need to ask ourselves, not just how did such a large proportion of our workforce find themselves laboring at tasks that they themselves consider pointless, but also why do so many people believe this state of affairs to be normal, inevitable—even desirable? More oddly still, why, despite the fact that they hold these opinions in the abstract, and even believe that it is entirely appropriate that those who labor at pointless jobs should be paid more and receive more honor and recognition than those who do something they consider to be useful, do they nonetheless find themselves depressed and miserable if they themselves end up in positions where they are being paid to do nothing, or nothing that they feel benefits others in any way? There is clearly a jumble of contradictory ideas and impulses at play here. One thing I want to do in this book is begin to sort them out. This will mean asking practical questions such as: How do bullshit jobs actually happen? It will also mean asking deep historical questions, like, When and how did we come to believe that creativity was supposed to be painful, or, how did we ever come up with the notion that it would be possible to sell one’s time? And finally, it will mean asking fundamental questions about human nature.

Writing this book also serves a political purpose.

I would like this book to be an arrow aimed at the heart of our civilization. There is something very wrong with what we have made ourselves. We have become a civilization based on work—not even “productive work” but work as an end and meaning in itself. We have come to believe that men and women who do not work harder than they wish at jobs they do not particularly enjoy are bad people unworthy of love, care, or assistance from their communities. It is as if we have collectively acquiesced to our own enslavement. The main political reaction to our awareness that half the time we are engaged in utterly meaningless or even counterproductive activities—usually under the orders of a person we dislike—is to rankle with resentment over the fact there might be others out there who are not in the same trap. As a result, hatred, resentment, and suspicion have become the glue that holds society together. This is a disastrous state of affairs. I wish it to end.

If this book can in any way contribute to that end, it will have been worth writing.

Chapter 1: What Is a Bullshit Job?

Let us begin with what might be considered a paradigmatic example of a bullshit job.

Kurt works for a subcontractor for the German military. Or… actually, he is employed by a subcontractor of a subcontractor of a subcontractor for the German military. Here is how he describes his work:

The German military has a subcontractor that does their IT work.

The IT firm has a subcontractor that does their logistics.

The logistics firm has a subcontractor that does their personnel management, and I work for that company.

Let’s say soldier A moves to an office two rooms farther down the hall. Instead of just carrying his computer over there, he has to fill out a form.

The IT subcontractor will get the form, people will read it and approve it, and forward it to the logistics firm.

The logistics firm will then have to approve the moving down the hall and will request personnel from us.

The office people in my company will then do whatever they do, and now I come in.

I get an email: “Be at barracks B at time C.” Usually these barracks are one hundred to five hundred kilometers [62–310 miles] away from my home, so I will get a rental car. I take the rental car, drive to the barracks, let dispatch know that I arrived, fill out a form, unhook the computer, load the computer into a box, seal the box, have a guy from the logistics firm carry the box to the next room, where I unseal the box, fill out another form, hook up the computer, call dispatch to tell them how long I took, get a couple of signatures, take my rental car back home, send dispatch a letter with all of the paperwork and then get paid.

So instead of the soldier carrying his computer for five meters, two people drive for a combined six to ten hours, fill out around fifteen pages of paperwork, and waste a good four hundred euros of taxpayers’ money.[4]

This might sound like a classic example of ridiculous military red tape of the sort Joseph Heller made famous in his 1961 novel Catch-22, except for one key element: almost nobody in this story actually works for the military. Technically, they’re all part of the private sector. There was a time, of course, when any national army also had its own communications, logistics, and personnel departments, but nowadays it all has to be done through multiple layers of private outsourcing.

Kurt’s job might be considered a paradigmatic example of a bullshit job for one simple reason: if the position were eliminated, it would make no discernible difference in the world. Likely as not, things would improve, since German military bases would presumably have to come up with a more reasonable way to move equipment. Crucially, not only is Kurt’s job absurd, but Kurt himself is perfectly well aware of this. (In fact, on the blog where he posted this story, he ended up defending the claim that the job served no purpose against a host of free market enthusiasts who popped up instantly—as free market enthusiasts tend to do on internet forums—to insist that since his job was created by the private sector, it by definition had to serve a legitimate purpose.)

This I consider the defining feature of a bullshit job: one so completely pointless that even the person who has to perform it every day cannot convince himself there’s a good reason for him to be doing it. He might not be able to admit this to his coworkers—often there are very good reasons not to do so. But he is convinced the job is pointless nonetheless.

So let this stand as an initial provisional definition:

Provisional Definition: a bullshit job is a form of employment that is so completely pointless, unnecessary, or pernicious that even the employee cannot justify its existence.

Some jobs are so pointless that no one even notices if the person who has the job vanishes. This usually happens in the public sector:

Spanish Civil Servant Skips Work for Six Years to Study Spinoza

—Jewish Times, February 26, 2016

A Spanish civil servant who collected a salary for at least six years without working used the time to become an expert on the writings of Jewish philosopher Baruch Spinoza, Spanish media reported.

A court in Cadiz in southern Spain last month ordered Joaquin Garcia, sixty-nine, to pay approximately $30,000 in fines for failing to show up for work at the water board, Agua de Cadiz, where Garcia was employed as an engineer since 1996, the news site euronews.com reported last week.

His absence was first noticed in 2010, when Garcia was due to receive a medal for long service. Deputy Mayor Jorge Blas Fernandez began making inquiries that led him to discover that Garcia had not been seen at his office in six years.

Reached by the newspaper El Mundo, unnamed sources close to Garcia said he devoted himself in the years before 2010 to studying the writings of Spinoza, a seventeenth-century heretic Jew from Amsterdam. One source interviewed by El Mundo said Garcia became an expert on Spinoza but denied claims Garcia never showed up for work, saying he came in at irregular times.[5]

This story made headlines in Spain. At a time when the country was undergoing severe austerity and high unemployment, it seemed outrageous that there were civil servants who could skip work for years without anybody noticing. Garcia’s defense, however, is not without merit. He explained that while he had worked for many years dutifully monitoring the city’s water treatment plant, the water board eventually came under the control of higher-ups who loathed him for his Socialist politics and refused to assign him any responsibilities. He found this situation so demoralizing that he was eventually obliged to seek clinical help for depression. Finally, and with the concurrence of his therapist, he decided that rather than just continue to sit around all day pretending to look busy, he would convince the water board he was being supervised by the municipality, and the municipality that he was being supervised by the water board, check in if there was a problem, but otherwise just go home and do something useful with his life.[6]

Similar stories about the public sector appear at regular intervals. One popular one is about postal carriers who decide that rather than delivering the mail, they prefer to dump it in closets, sheds, or Dumpsters—with the result that tons of letters and packages pile up for years without anyone figuring it out.[7] David Foster Wallace’s novel The Pale King, about life inside an Internal Revenue Service office in Peoria, Illinois, goes even further: it culminates in an auditor dying at his desk and remaining propped in his chair for days before anyone notices. This seems pure absurdist caricature, but in 2002, something almost exactly like this did happen in Helsinki. A Finnish tax auditor working in a closed office sat dead at his desk for more than forty-eight hours while thirty colleagues carried on around him. “People thought he wanted to work in peace, and no one disturbed him,” remarked his supervisor—which, if you think about it, is actually rather thoughtful.[8]

It’s stories like these, of course, that inspire politicians all over the world to call for a larger role for the private sector—where, it is always claimed, such abuses would not occur. And while it is true so far that we have not heard any stories of FedEx or UPS employees stowing their parcels in garden sheds, privatization generates its own, often much less genteel, varieties of madness—as Kurt’s story shows. I need hardly point out the irony in the fact that Kurt was, ultimately, working for the German military. The German military has been accused of many things over the years, but inefficiency was rarely one of them. Still, a rising tide of bullshit soils all boats. In the twenty-first century, even panzer divisions have come to be surrounded by a vast penumbra of sub-, sub-sub-, and sub-sub-subcontractors; tank commanders are obliged to perform complex and exotic bureaucratic rituals in order to move equipment from one room to another, even as those providing the paperwork secretly post elaborate complaints to blogs about how idiotic the whole thing is.

If these cases are anything to go by, the main difference between the public and private sectors is not that either is more, or less, likely to generate pointless work. It does not even necessarily lie in the kind of pointless work each tends to generate. The main difference is that pointless work in the private sector is likely to be far more closely supervised. This is not always the case. As we’ll learn, the number of employees of banks, pharmaceutical companies, and engineering firms allowed to spend most of their time updating their Facebook profiles is surprisingly high. Still, in the private sector, there are limits. If Kurt were to simply walk off the job to take up the study of his favorite seventeenth-century Jewish philosopher, he would be swiftly relieved of his position. If the Cadiz Water Board had been privatized, Joaquin Garcia might well still have been deprived of responsibilities by managers who disliked him, but he would have been expected to sit at his desk and pretend to work every day anyway, or find alternate employment.

I will leave readers to decide for themselves whether such a state of affairs should be considered an improvement.

why a mafia hit man is not a good example of a bullshit job

To recap: what I am calling “bullshit jobs” are jobs that are primarily or entirely made up of tasks that the person doing that job considers to be pointless, unnecessary, or even pernicious. Jobs that, were they to disappear, would make no difference whatsoever. Above all, these are jobs that the holders themselves feel should not exist.

Contemporary capitalism seems riddled with such jobs. As I mentioned in the preface, a YouGov poll found that in the United Kingdom only 50 percent of those who had full-time jobs were entirely sure their job made any sort of meaningful contribution to the world, and 37 percent were quite sure it did not. A poll by the firm Schouten & Nelissen carried out in Holland put the latter number as high as 40 percent.[9] If you think about it, these are staggering statistics. After all, a very large percentage of jobs involves doing things that no one could possibly see as pointless. One must assume that the percentage of nurses, bus drivers, dentists, street cleaners, farmers, music teachers, repairmen, gardeners, firefighters, set designers, plumbers, journalists, safety inspectors, musicians, tailors, and school crossing guards who checked “no” to the question “Does your job make any meaningful difference in the world?” was approximately zero. My own research suggests that store clerks, restaurant workers, and other low-level service providers rarely see themselves as having bullshit jobs, either. Many service workers hate their jobs; but even those who do are aware that what they do does make some sort of meaningful difference in the world.[10]

So if 37 percent to 40 percent of a country’s working population insist their work makes no difference whatsoever, and another substantial chunk suspects that it might not, one can only conclude that any office worker who one might suspect secretly believes themselves to have a bullshit job does, indeed, believe this.

The main thing I would like to do in this first chapter is to define what I mean by bullshit jobs; in the next chapter I will lay out a typology of what I believe the main varieties of bullshit jobs to be. This will open the way, in later chapters, to considering how bullshit jobs come about, why they have come to be so prevalent, and to considering their psychological, social, and political effects. I am convinced these effects are deeply insidious. We have created societies where much of the population, trapped in useless employment, have come to resent and despise equally those who do the most useful work in society, and those who do no paid work at all. But before we can analyze this situation, it will be necessary to address some potential objections.

The reader may have noticed a certain ambiguity in my initial definition. I describe bullshit jobs as involving tasks the holder considers to be “pointless, unnecessary, or even pernicious.” But, of course, jobs that have no significant effect on the world and jobs that have pernicious effects on the world are hardly the same thing. Most of us would agree that a Mafia hit man does more harm than good in the world, overall; but could you really call Mafia hit man a bullshit job? That just feels somehow wrong.

As Socrates teaches us, when this happens—when our own definitions produce results that seem intuitively wrong to us—it’s because we’re not aware of what we really think. (Hence, he suggests that the true role of philosophers is to tell people what they already know but don’t realize that they know. One could argue that anthropologists like myself do something similar.) The phrase “bullshit jobs” clearly strikes a chord with many people. It makes sense to them in some way. This means they have, at least on some sort of tacit intuitive level, criteria in their minds that allow them to say “That was such a bullshit job” or “That one was bad, but I wouldn’t say it was exactly bullshit.” Many people with pernicious jobs feel the phrase fits them; others clearly don’t. The best way to tease out what those criteria are is to examine borderline cases.

So, why does it feel wrong to say a hit man has a bullshit job?[11]

I suspect there are multiple reasons, but one is that the Mafia hit man (unlike, say, a foreign currency speculator or a brand marketing researcher) is unlikely to make false claims. True, a mafioso will usually claim he is merely a “businessman.” But insofar as he is willing to own up to the nature of his actual occupation at all, he will tend to be pretty up front about what he does. He is unlikely to pretend his work is in any way beneficial to society, even to the extent of insisting it contributes to the success of a team that’s providing some useful product or service (drugs, prostitution, and so on), or if he does, the pretense is likely to be paper thin.

This allows us to refine our definition. Bullshit jobs are not just jobs that are useless or pernicious; typically, there has to be some degree of pretense and fraud involved as well. The jobholder must feel obliged to pretend that there is, in fact, a good reason why her job exists, even if, privately, she finds such claims ridiculous. There has to be some kind of gap between pretense and reality. (This makes sense etymologically[12]: “bullshitting” is, after all, a form of dishonesty.[13])

So we might make a second pass:

Provisional Definition 2: a bullshit job is a form of employment that is so completely pointless, unnecessary, or pernicious that even the employee cannot justify its existence even though the employee feels obliged to pretend that this is not the case.

Of course, there is another reason why hit man should not be considered a bullshit job. The hit man is not personally convinced his job should not exist. Most mafiosi believe they are part of an ancient and honorable tradition that is a value in its own right, whether or not it contributes to the larger social good. This is, incidentally, the reason why “feudal overlord” is not a bullshit job, either. Kings, earls, emperors, pashas, emirs, squires, zamindars, landlords, and the like might, arguably, be useless people; many of us would insist (and I would be inclined to agree) that they play pernicious roles in human affairs. But they don’t think so. So unless the king is secretly a Marxist, or a Republican, one can say confidently that “king” is not a bullshit job.

This is a useful point to bear in mind because most people who do a great deal of harm in the world are protected against the knowledge that they do so. Or they allow themselves to believe the endless accretion of paid flunkies and yes-men that inevitably assemble around them to come up with reasons why they are really doing good. (Nowadays, these are sometimes referred to as think tanks.) This is just as true of financial-speculating investment bank CEOs as it is of military strongmen in countries such as North Korea and Azerbaijan. Mafiosi families are unusual perhaps because they make few such pretensions—but in the end, they are just miniature, illicit versions of the same feudal tradition, being originally enforcers for local landlords in Sicily who have over time come to operate on their own hook.[14]

There is one final reason why hit man cannot be considered a bullshit job: it’s not entirely clear that hit man is a “job” in the first place. True, the hit man might well be employed by the local crime boss in some capacity or other. Perhaps the crime boss makes up some dummy security job for him in his casino. In that case, we can definitely say that job is a bullshit job. But he is not receiving a paycheck in his capacity as a hit man.

This point allows us to refine our definition even further. When people speak of bullshit jobs, they are generally referring to employment that involves being paid to work for someone else, either on a waged or salaried basis (most would also include paid consultancies). Obviously, there are many self-employed people who manage to get money from others by means of falsely pretending to provide them with some benefit or service (normally we call them grifters, scam artists, charlatans, or frauds), just as there are self-employed people who get money off others by doing or threatening to do them harm (normally we refer to them as muggers, burglars, extortionists, or thieves). In the first case, at least, we can definitely speak of bullshit, but not of bullshit jobs, because these aren’t “jobs,” properly speaking. A con job is an act, not a profession. So is a Brink’s job. People do sometimes speak of professional burglars, but this is just a way of saying that theft is the burglar’s primary source of income.[15] No one is actually paying the burglar regular wages or a salary to break into people’s homes. For this reason, one cannot say that burglar is, precisely, a job, either.[16]

These considerations allow us to formulate what I think can serve as a final working definition:

Final Working Definition: a bullshit job is a form of paid employment that is so completely pointless, unnecessary, or pernicious that even the employee cannot justify its existence even though, as part of the conditions of employment, the employee feels obliged to pretend that this is not the case.

on the importance of the subjective element, and also, why it can be assumed that those who believe they have bullshit jobs are generally correct

This, I think, is a serviceable definition; good enough, anyway, for the purposes of this book.

The attentive reader may have noticed one remaining ambiguity. The definition is mainly subjective. I define a bullshit job as one that the worker considers to be pointless, unnecessary, or pernicious—but I also suggest that the worker is correct.[17] I’m assuming there is an underlying reality here. One really has to make this assumption because otherwise we’d be stuck with accepting that the exact same job could be bullshit one day and nonbullshit the next, depending on the vagaries of some fickle worker’s mood. All I’m really saying here is that since there is such a thing as social value, as apart from mere market value, but since no one has ever figured out an adequate way to measure it, the worker’s perspective is about as close as one is likely to get to an accurate assessment of the situation.[18]

Often it’s pretty obvious why this should be the case: if an office worker is really spending 80 percent of her time designing cat memes, her coworkers in the next cubicle may or may not be aware of what’s going on, but there’s no way that she is going to be under any illusions about what she’s doing. But even in more complicated cases, where it’s a question of how much the worker really contributes to an organization, I think it’s safe to assume the worker knows best. I’m aware this position will be taken as controversial in certain quarters. Executives and other bigwigs will often insist that most people who work for a large corporation don’t fully understand their contributions, since the big picture can be seen only from the top. I am not saying this is entirely untrue: frequently there are some parts of the larger context that lower-level workers cannot see or simply aren’t told about. This is especially true if the company is up to anything illegal.[19] But it’s been my experience that any underling who works for the same outfit for any length of time—say, a year or two—will normally be taken aside and let in on the company secrets.

True, there are exceptions. Sometimes managers intentionally break up tasks in such a way that the workers don’t really understand how their efforts contribute to the overall enterprise. Banks will often do this. I’ve even heard examples of factories in America where many of the line workers were unaware of what the plant was actually making; though in such cases, it almost always turned out to be because the owners had intentionally hired people who didn’t speak English. Still, in those cases, workers tend to assume that their jobs are useful; they just don’t know how. Generally speaking, I think employees can be expected to know what’s going on in an office or on a shop floor, and, certainly, to understand how their work does, or does not, contribute to the enterprise—at least, better than anybody else.[20] With the higher-ups, that’s not always clear. One frequent theme I encountered in my research was of underlings wondering in effect, “Does my supervisor actually know that I spend eighty percent of my time designing cat memes? Are they just pretending not to notice, or are they actually unaware?” And since the higher up the chain of command you are, the more reason people have to hide things from you, the worse this situation tends to become.

The real sticky problem comes in when it’s a question of whether certain kinds of work (say, telemarketing, market research, consulting) are bullshit—that is, whether they can be said to produce any sort of positive social value. Here, all I’m saying is that it’s best to defer to the judgment of those who do that kind of work. Social value, after all, is largely just what people think it is. In which case, who else is in a better position to judge? In this instance, I’d say: if the preponderance of those engaged in a certain occupation privately believe their work is of no social value, one should proceed along the assumption they are right.[21]

Sticklers will no doubt raise objections here too. They might ask: How can one actually know for sure what the majority of people working in an industry secretly think? And the answer is that obviously, you can’t. Even if it were possible to conduct a poll of lobbyists or financial consultants, it’s not clear how many would give honest answers. When I spoke in broad strokes about useless industries in the original essay, I did so on the assumption that lobbyists and financial consultants are, in fact, largely aware of their uselessness—indeed, that many if not most of them are haunted by the knowledge that nothing of value would be lost to the world were their jobs simply to disappear.

I could be wrong. It is possible that corporate lobbyists or financial consultants genuinely subscribe to a theory of social value that holds their work to be essential to the health and prosperity of the nation. It is possible they therefore sleep securely in their beds, confident that their work is a blessing for everyone around them. I don’t know, but I suspect this is more likely to be true as one moves up the food chain, since it would appear to be a general truth that the more harm a category of powerful people do in the world, the more yes-men and propagandists will tend to accumulate around them, coming up with reasons why they are really doing good—and the more likely it is that at least some of those powerful people will believe them.[22] Corporate lobbyists and financial consultants certainly do seem responsible for a disproportionately large share of the harm done in the world (at least, harm carried out as part of one’s professional duties). Perhaps they really do have to force themselves to believe in what they do.

In that case, finance and lobbying wouldn’t be bullshit jobs at all; they’d actually be more like hit men. At the very, very top of the food chain, this does appear to be the case. I remarked in the original 2013 piece, for instance, that I’d never known a corporate lawyer who didn’t think his or her job was bullshit. But, of course, that’s also a reflection of the sort of corporate lawyers that I’m likely to know: the sort who used to be poet-musicians. But even more significantly: the sort who are not particularly high ranking. It’s my impression that genuinely powerful corporate lawyers think their roles are entirely legitimate. Or perhaps they simply don’t care whether they’re doing good or harm.

At the very top of the financial food chain, that’s certainly the case. In April 2013, by a strange coincidence, I happened to be present at a conference on “Fixing the Banking System for Good” held inside the Philadelphia Federal Reserve, where Jeffrey Sachs, the Columbia University economist most famous for having designed the “shock therapy” reforms applied to the former Soviet Union, had a live-on-video-link session in which he startled everyone by presenting what careful journalists might describe as an “unusually candid” assessment of those in charge of America’s financial institutions. Sachs’s testimony is especially valuable because, as he kept emphasizing, many of these people were quite up front with him because they assumed (not entirely without reason) that he was on their side:

Look, I meet a lot of these people on Wall Street on a regular basis right now… I know them. These are the people I have lunch with. And I am going to put it very bluntly: I regard the moral environment as pathological. [These people] have no responsibility to pay taxes; they have no responsibility to their clients; they have no responsibility to counterparties in transactions. They are tough, greedy, aggressive, and feel absolutely out of control in a quite literal sense, and they have gamed the system to a remarkable extent. They genuinely believe they have a God-given right to take as much money as they possibly can in any way that they can get it, legal or otherwise.

If you look at the campaign contributions, which I happened to do yesterday for another purpose, the financial markets are the number one campaign contributors in the US system now. We have a corrupt politics to the core… both parties are up to their necks in this.

But what it’s led to is this sense of impunity that is really stunning, and you feel it on the individual level right now. And it’s very, very unhealthy. I have waited for four years… five years now to see one figure on Wall Street speak in a moral language. And I have not seen it once.[23]

So there you have it. If Sachs was right—and honestly, who is in a better position to know?—then at the commanding heights of the financial system, we’re not actually talking about bullshit jobs. We’re not even talking about people who have come to believe their own propagandists. Really we’re just talking about a bunch of crooks.

Another distinction that’s important to bear in mind is between jobs that are pointless and jobs that are merely bad. I will refer to the latter as “shit jobs,” since people often do.

The only reason I bring up the matter is because the two are so often confused—which is odd, because they’re in no way similar. In fact, they might almost be considered opposites. Bullshit jobs often pay quite well and tend to offer excellent working conditions. They’re just pointless. Shit jobs are usually not at all bullshit; they typically involve work that needs to be done and is clearly of benefit to society; it’s just that the workers who do them are paid and treated badly.

Some jobs, of course, are intrinsically unpleasant but fulfilling in other ways. (There’s an old joke about the man whose job it was to clean up elephant dung after the circus. No matter what he did, he couldn’t get the smell off his body. He’d change his clothes, wash his hair, scrub himself endlessly, but he still reeked, and women tended to avoid him. An old friend finally asked him, “Why do you do this to yourself? There are so many other jobs you could do.” The man answered, “What? And give up show business!?”) These jobs can be considered neither shit nor bullshit, whatever the content of the work. Other jobs—ordinary cleaning, for example—are in no sense inherently degrading, but they can easily be made so.

The cleaners at my current university, for instance, are treated very badly. As in most universities these days, their work has been outsourced. They are employed not directly by the school but by an agency, the name of which is emblazoned on the purple uniforms they wear. They are paid little, obliged to work with dangerous chemicals that often damage their hands or otherwise force them to take time off to recover (for which time they are not compensated), and generally treated with arbitrariness and disrespect. There is no particular reason that cleaners have to be treated in such an abusive fashion. But at the very least, they can take some pride in knowing (and, in fact, I can attest, for the most part do take pride in knowing) that buildings do need to be cleaned, and, therefore, without them, the business of the university could not go on.[24]

Shit jobs tend to be blue collar and pay by the hour, whereas bullshit jobs tend to be white collar and salaried. Those who work shit jobs tend to be the object of indignities; they not only work hard but also are held in low esteem for that very reason. But at least they know they’re doing something useful. Those who work bullshit jobs are often surrounded by honor and prestige; they are respected as professionals, well paid, and treated as high achievers—as the sort of people who can be justly proud of what they do. Yet secretly they are aware that they have achieved nothing; they feel they have done nothing to earn the consumer toys with which they fill their lives; they feel it’s all based on a lie—as, indeed, it is.

These are two profoundly different forms of oppression. I certainly wouldn’t want to equate them; few people I know would trade in a pointless middle-management position for a job as a ditchdigger, even if they knew that the ditches really did need to be dug. (I do know people who quit such jobs to become cleaners, though, and are quite happy that they did.) All I wish to emphasize here is that each is indeed oppressive in its own way.[25]

It is also theoretically possible to have a job that is both shit and bullshit. I think it’s fair to say that if one is trying to imagine the worst type of job one could possibly have, it would have to be some kind of combination of the two. Once, while serving time in exile at a Siberian prison camp, Dostoyevsky developed the theory that the worst torture one could possibly devise would be to force someone to endlessly perform an obviously pointless task. Even though convicts sent to Siberia had theoretically been sentenced to “hard labor,” he observed, the work wasn’t actually all that hard. Most peasants worked far harder. But peasants were working at least partly for themselves. In prison camps, the “hardness” of the labor was the fact that the laborer got nothing out of it:

It once came into my head that if it were desired to reduce a man to nothing—to punish him atrociously, to crush him in such a manner that the most hardened murderer would tremble before such a punishment, and take fright beforehand—it would only be necessary to give to his work a character of complete uselessness, even to absurdity.

Hard labor, as it is now carried on, presents no interest to the convict; but it has its utility. The convict makes bricks, digs the earth, builds; and all his occupations have a meaning and an end. Sometimes the prisoner may even take an interest in what he is doing. He then wishes to work more skillfully, more advantageously. But let him be constrained to pour water from one vessel into another, to pound sand, to move a heap of earth from one place to another, and then immediately move it back again, then I am persuaded that at the end of a few days, the prisoner would hang himself or commit a thousand capital crimes, preferring rather to die than endure such humiliation, shame, and torture.[26]

on the common misconception that bullshit jobs are confined largely to the public sector

So far, we have established three broad categories of jobs: useful jobs (which may or may not be shit jobs), bullshit jobs, and a small but ugly penumbra of jobs such as gangsters, slumlords, top corporate lawyers, or hedge fund CEOs, made up of people who are basically just selfish bastards and don’t really pretend to be anything else.[27] In each case, I think it’s fair to trust that those who have these jobs know best which category they belong to. What I’d like to do next, before turning to the typology, is to clear up a few common misconceptions. If you toss out the notion of bullshit jobs to someone who hasn’t heard the term before, that person may assume you’re really talking about shit jobs. But if you clarify, he is likely to fall back on one of two common stereotypes: he may assume you’re talking about government bureaucrats. Or, if he’s a fan of Douglas Adams’s The Hitchhiker’s Guide to the Galaxy, he may assume you’re talking about hairdressers.

Let me deal with the bureaucrats first, since they are the easiest to address. I doubt anyone would deny that there are plenty of useless bureaucrats in the world. What’s significant to me, though, is that nowadays, useless bureaucrats seem just as rife in the private sector as in the public sector. You are as likely to encounter an exasperating little man in a suit reading out incomprehensible rules and regulations in a bank or mobile phone outlet as in the passport office or zoning board. Even more, public and private bureaucracies have become so entangled that it’s often very difficult to tell them apart. That’s one reason I started this chapter the way I did, with the story of a man working for a private firm contracting with the German military. Not only does it highlight how wrong it is to assume that bullshit jobs exist largely in government bureaucracies, but it also illustrates how “market reforms” almost invariably create more bureaucracy, not less.[28] As I pointed out in an earlier book, The Utopia of Rules, if you complain about getting some bureaucratic run-around from your bank, bank officials are likely to tell you it’s all the fault of government regulations; but if you research where those regulations actually come from, you’ll likely discover that most of them were written by the bank.

Nonetheless, the assumption that government is necessarily top-heavy with featherbedding and unnecessary levels of administrative hierarchy, while the private sector is lean and mean, is by now so firmly lodged in people’s heads that it seems no amount of evidence will dislodge it.

No doubt some of this misconception is due to memories of countries such as the Soviet Union, which had a policy of full employment and was therefore obliged to make up jobs for everyone whether a need existed or not. This is how the USSR ended up with shops where customers had to go through three different clerks to buy a loaf of bread, or road crews where, at any given moment, two-thirds of the workers were drinking, playing cards, or dozing off. This is always represented as exactly what would never happen under capitalism. The last thing a private firm, competing with other private firms, would do is to hire people it doesn’t actually need. If anything, the usual complaint about capitalism is that it’s too efficient, with private workplaces endlessly hounding employees with constant speed-ups, quotas, and surveillance.

Obviously, I’m not going to deny that the latter is often the case. In fact, the pressure on corporations to downsize and increase efficiency has redoubled since the mergers and acquisitions frenzy of the 1980s. But this pressure has been directed almost exclusively at the people at the bottom of the pyramid, the ones who are actually making, maintaining, fixing, or transporting things. Anyone forced to wear a uniform in the exercise of his daily labors, for instance, is likely to be hard-pressed.[29] FedEx and UPS delivery workers have backbreaking schedules designed with “scientific” efficiency. In the upper echelons of those same companies, things are not the same. We can, if we like, trace this back to the key weakness in the managerial cult of efficiency—its Achilles’ heel, if you will. When managers began trying to come up with scientific studies of the most time- and energy-efficient ways to deploy human labor, they never applied those same techniques to themselves—or if they did, the effect appears to have been the opposite of what they intended. As a result, the same period that saw the most ruthless application of speed-ups and downsizing in the blue-collar sector also brought a rapid multiplication of meaningless managerial and administrative posts in almost all large firms. It’s as if businesses were endlessly trimming the fat on the shop floor and using the resulting savings to acquire even more unnecessary workers in the offices upstairs. (As we’ll see, in some companies, this was literally the case.) The end result was that, just as Socialist regimes had created millions of dummy proletarian jobs, capitalist regimes somehow ended up presiding over the creation of millions of dummy white-collar jobs instead.

We’ll examine how this happened in detail later in the book. For now, let me just emphasize that almost all the dynamics we will be describing happen equally in the public and private sectors, and that this is hardly surprising, considering that today, the two sectors are almost impossible to tell apart.

why hairdressers are a poor example of a bullshit job

If one common reaction is to blame government, another is, oddly, to blame women. Once you put aside the notion that you’re only talking about government bureaucrats, many will assume you must be talking above all about secretaries, receptionists, and various sorts of (typically female) administrative staff. Now, clearly, many such administrative jobs are indeed bullshit by the definition developed here, but the assumption that it’s mainly women who end up in bullshit jobs is not only sexist but also represents, to my mind, a profound ignorance of how most offices actually work. It’s far more likely that the (female) administrative assistant for a (male) vice dean or “Strategic Network Manager” is the only person doing any real work in that office, and that it’s her boss who might as well be lounging around in his office playing World of Warcraft, or very possibly, actually is.

I will return to this dynamic in the next chapter when we examine the role of flunkies; here I will just emphasize that we do have statistical evidence in this regard. While the YouGov survey didn’t break down its results by occupation, which is a shame, it did break them down by gender. The results revealed that men are far more likely than women to feel that their jobs are pointless (42 percent versus 32 percent). Again, it seems reasonable to assume that they are right.[30]

Finally, the hairdressers. I’m afraid to say that Douglas Adams has a lot to answer for here. Sometimes it seemed to me that whenever I would propose the notion that a large percentage of the work being done in our society was unnecessary, some man (it was always a man) would pop up and say, “Oh, yes, you mean, like, hairdressers?” Then he would usually make it clear that he was referring to Douglas Adams’s sci-fi comedic novel The Restaurant at the End of the Universe, in which the leaders of a planet called Golgafrincham decide to rid themselves of their most useless inhabitants by claiming, falsely, that the planet is about to be destroyed. To deal with the crisis they create an “Ark Fleet” of three ships, A, B, and C, the first to contain the creative third of the population, the last to include blue-collar workers, and the middle one to contain the useless remainder. All are to be placed in suspended animation and sent to a new world; except that only the B ship is actually built and it is sent on a collision course with the sun. The book’s heroes accidentally find themselves on Ship B, investigating a hall containing millions of space sarcophagi that hold such useless people, whom they initially assume to be dead. One begins reading off the plaques next to each sarcophagus:

“It says ‘Golgafrincham Ark Fleet, Ship B, Hold Seven, Telephone Sanitizer, Second Class’—and a serial number.”

“A telephone sanitizer?” said Arthur. “A dead telephone sanitizer?”

“Best kind.”

“But what’s he doing here?”

Ford peered through the top at the figure within.

“Not a lot,” he said, and suddenly flashed one of those grins of his which always made people think he’d been overdoing things recently and should try to get some rest.

He scampered over to another sarcophagus. A moment’s brisk towel work, and he announced:

“This one’s a dead hairdresser. Hoopy!”

The next sarcophagus revealed itself to be the last resting place of an advertising account executive; the one after that contained a secondhand car salesman, third class.[31]

Now, it’s obvious why this story might seem relevant to those who first hear of bullshit jobs, but the list is actually quite odd. For one thing, professional telephone sanitizers don’t really exist,[32] and while advertising executives and used-car salesmen do—and are indeed professions society could arguably be better off without—for some reason, when Douglas Adams aficionados recall the story, it’s always the hairdressers they remember.

I will be honest here. I have no particular bone to pick with Douglas Adams; in fact, I have a fondness for all manifestations of humorous British seventies sci-fi; but nonetheless, I find this particular fantasy alarmingly condescending. First of all, the list is not really a list of useless professions at all. It’s a list of the sort of people a middle-class bohemian living in Islington around that time would find mildly annoying. Does that mean that they deserve to die?[33] Myself, I fantasize about eliminating the jobs, not the people who have to do them. To justify extermination, Adams seems to have intentionally selected people that he thought were not only useless but also could be thought of as embracing or identifying with what they did.

Before moving on, then, let us reflect on the status of hairdressers. Why is a hairdresser not a bullshit job? Well, the most obvious reason is precisely that most hairdressers do not believe it to be one. To cut and style hair makes a demonstrable difference in the world, and the notion that it is unnecessary vanity is purely subjective: Who is to say whose judgment of the intrinsic value of hairstyling is correct? Adams’s first novel, The Hitchhiker’s Guide to the Galaxy, which became something of a cultural phenomenon, was published in 1979. I well remember, as a teenager in New York in that year, observing how small crowds would often gather outside the barbershop on Astor Place to watch punk rockers get elaborate purple mohawks. Was Douglas Adams suggesting those giving them the mohawks also deserved to die, or just those hairdressers whose style sense he did not appreciate? In working-class communities, hair parlors often serve as gathering places; women of a certain age and background are known to spend hours at the neighborhood hair parlor, which becomes a place to swap local news and gossip.[34] It’s hard to escape the impression, though, that in the minds of those who invoke hairdressers as a prime example of a useless job, this is precisely the problem. They seem to be imagining a gaggle of middle-aged women idly gossiping under their metallic helmets while others fuss about making some marginal attempts at beautification on a person who (it is suggested), being too fat, too old, and too working class, will never be attractive no matter what is done to her. It’s basically just snobbery, with a dose of gratuitous sexism thrown in.

Logically, objecting to hairdressers on this basis makes about as much sense as saying running a bowling alley or playing bagpipes is a bullshit job because you personally don’t enjoy bowling or bagpipe music and don’t much like the sort of people who do.

Now, some might feel I am being unfair. How do you know, they might object, that Douglas Adams wasn’t really thinking, not of those who hairdress for the poor, but of those who hairdress for the very rich? What about superposh hairdressers who charge insane amounts of money to make the daughters of financiers or movie executives look odd in some up-to-the-moment fashion? Might they not harbor a secret suspicion that their work is valueless, even pernicious? Would not that then qualify them as having a bullshit job?

In theory, of course, we must allow this could be correct. But let us explore the possibility more deeply. Obviously, there is no objective measure of quality whereby one can say that haircut X is worth $15, haircut Y, $150, and haircut Z, $1,500. In the latter case, what the customer is usually paying for is mainly the ability to say she paid $1,500 for a haircut, or perhaps that he got his hair done by the same stylist as Kim Kardashian or Tom Cruise. We are speaking of overt displays of wastefulness and extravagance. Now, one could certainly make the argument that there’s a deep structural affinity between wasteful extravagance and bullshit, and theorists of economic psychology from Thorstein Veblen, to Sigmund Freud, to Georges Bataille have pointed out that at the very pinnacle of the wealth pyramid—think here of Donald Trump’s gilded elevators—there is a very thin line between extreme luxury and total crap. (There’s a reason why in dreams, gold is often symbolized by excrement, and vice versa.)

What’s more, there is indeed a long literary tradition—starting with the French writer Émile Zola’s Au Bonheur des Dames (The Ladies’ Delight, 1883) and running through innumerable British comedy routines—celebrating the profound feelings of contempt and loathing that merchants and sales staff in retail outlets often feel for both their clients and the products they sell them. If the retail worker genuinely believes that he provides nothing of value to his customers, can we then say that retail worker does, indeed, have a bullshit job? I would say the technical answer, according to our working definition, would have to be yes; but at least according to my own research, the number of retail workers who feel this way is actually quite small. Purveyors of expensive perfumes might think their products are overpriced and their clients are mostly boorish idiots, but they rarely feel the perfume industry itself should be abolished.

My own research indicated that within the service economy, there were only three significant exceptions to this rule: information technology (IT) providers, telemarketers, and sex workers. Many of the first category, and pretty much all of the second, were convinced they were basically engaged in scams. The final example is more complicated and probably moves us into territory that extends beyond the precise confines of “bullshit job” into something more pernicious, but I think it’s worth taking note of nonetheless. While I was conducting research, a number of women wrote to me or told me about their time as pole dancers, Playboy Club bunnies, frequenters of “Sugar Daddy” websites and the like, and suggested that such occupations should be mentioned in my book. The most compelling argument to this effect was from a former exotic dancer, now professor, who made a case that most sex work should be considered a bullshit job because, while she acknowledged that sex work clearly did answer a genuine consumer demand, something was terribly, terribly wrong with any society that effectively tells the vast majority of its female population they are worth more dancing on boxes between the ages of eighteen and twenty-five than they will be at any subsequent point in their lives, whatever their talents or accomplishments. If the same woman can make five times as much money stripping as she could teaching as a world-recognized scholar, could not the stripping job be considered bullshit simply on that basis?[35]

It’s hard to deny the power of her argument. (One might add that the mutual contempt between service provider and service user in the sex industry is often far greater than what one might expect to find in even the fanciest boutique.) The only objection I could really raise here is that her argument might not go far enough. It’s not so much that stripper is a bullshit job, perhaps, but that this situation shows us to be living in a bullshit society.[36]

on the difference between partly bullshit jobs, mostly bullshit jobs, and purely and entirely bullshit jobs

Finally, I must very briefly address the inevitable question: What about jobs that are just partly bullshit?

This is a tough one because there are very few jobs that don’t involve at least a few pointless or idiotic elements. To some degree, this is probably just the inevitable side effect of the workings of any complex organization. Still, it’s clear there is a problem and the problem is getting worse. I don’t think I know anyone who has had the same job for thirty years or more who doesn’t feel that the bullshit quotient has increased over the time he or she has been doing it. I might add that this is certainly true of my own work as a professor. Teachers in higher education spend increasing amounts of time filling out administrative paperwork. This can actually be documented, since one of the pointless tasks we are asked to do (and never used to be asked to do) is to fill out quarterly time allocation surveys in which we record precisely how much time each week we spend on administrative paperwork. All indications suggest that this trend is gathering steam. As the French version of Slate magazine noted in 2013, “la bullshitisation de l’économie n’en est qu’à ses débuts.” (The bullshitization of the economy has only just begun.)[37]